Hyperscale operations need spare parts on hand the moment a component fails. Manual forecasting cannot keep pace with component failure rates.

Automated parts inventory workflows in data center operations connect BMC telemetry to demand forecasting, enabling predictive stock allocation across distributed facilities. API-driven replenishment reduces emergency shipments while maintaining SLA compliance through real-time availability tracking.

Engineers checking parts availability hit five different systems across regional warehouses. By the time they locate stock, SLA windows have narrowed and expedited shipping becomes the only option.

Demand forecasting runs on spreadsheets updated monthly. Server refresh cycles and unexpected failure clusters burn through safety stock before purchase orders clear, creating stockouts during critical maintenance windows.

Hardware generations overlap. Parts databases lack deprecation signals. Inventory managers discover obsolete stock only after annual audits, while engineers order duplicate parts because substitute matching requires tribal knowledge.

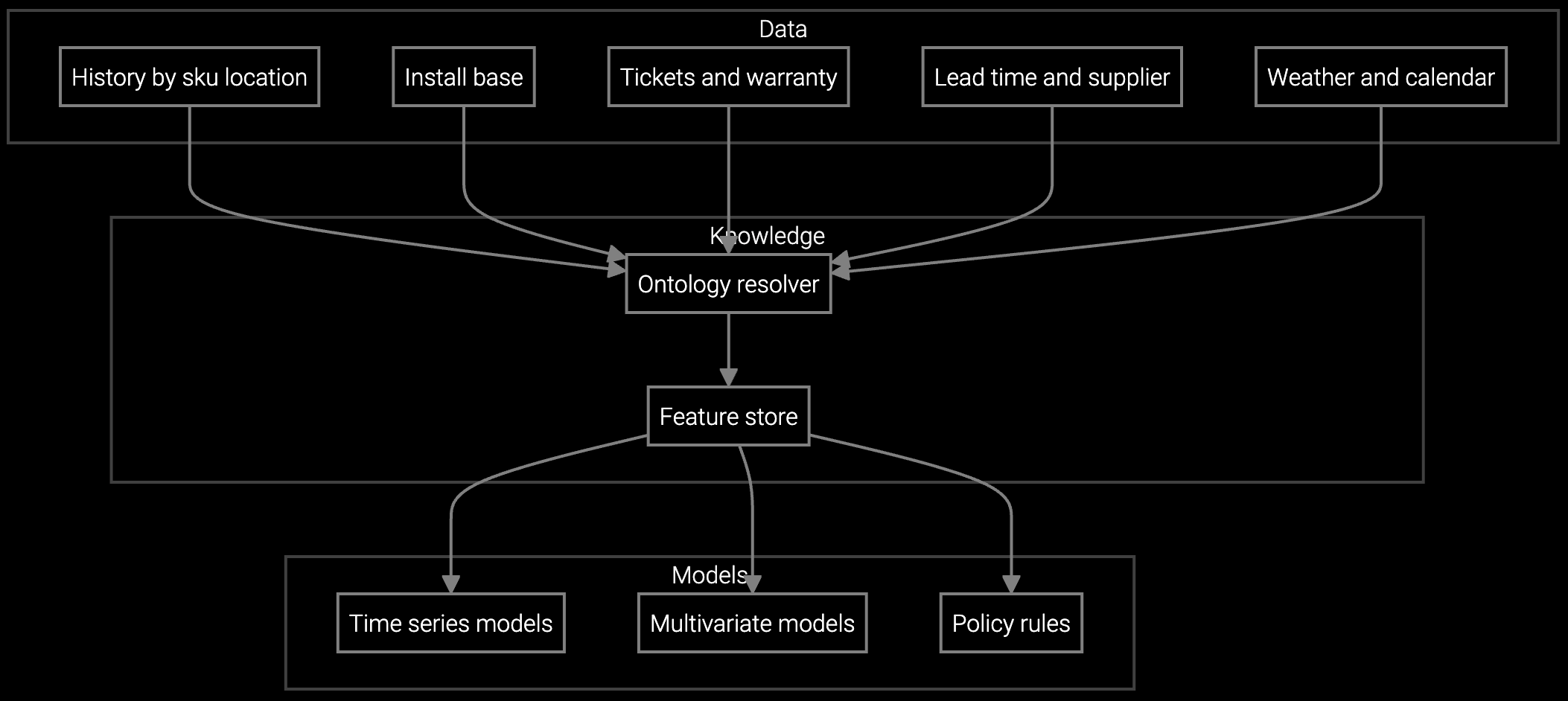

The platform consumes BMC and IPMI telemetry streams to detect failure signatures before hardware dies. Prediction models trained on historical RMA patterns trigger preemptive stock allocation to facilities with elevated risk profiles. When a power supply exhibits temperature drift or a RAID controller logs I/O errors, the system automatically reserves replacement inventory at the nearest warehouse.
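The detect-then-reserve flow described above can be sketched in a few lines. This is a minimal illustration, not the platform's prediction model: the drift test is a simple z-score against an earlier baseline, and `Warehouse`, `has_temperature_drift`, `reserve_nearest`, the part number, and the sample readings are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Optional


@dataclass
class Warehouse:
    name: str
    distance_km: float       # distance to the affected facility
    stock: dict[str, int]    # part number -> units on hand


def has_temperature_drift(readings: list[float], window: int = 5,
                          z_limit: float = 3.0) -> bool:
    """True when the latest `window` readings average more than `z_limit`
    standard deviations above the earlier baseline."""
    if len(readings) < 2 * window:
        return False  # not enough history to form a baseline
    baseline, recent = readings[:-window], readings[-window:]
    spread = stdev(baseline)
    if spread == 0:
        return mean(recent) > mean(baseline)
    return (mean(recent) - mean(baseline)) / spread > z_limit


def reserve_nearest(part: str, warehouses: list[Warehouse]) -> Optional[str]:
    """Reserve one unit at the closest warehouse that has the part in stock."""
    for wh in sorted(warehouses, key=lambda w: w.distance_km):
        if wh.stock.get(part, 0) > 0:
            wh.stock[part] -= 1
            return wh.name
    return None  # no warehouse can cover the reservation


# Hypothetical PSU temperature readings in degrees Celsius.
psu_temps = [41.0, 40.5, 41.2, 40.8, 41.1, 40.9, 41.0, 40.7,
             47.5, 48.1, 48.9, 49.4, 50.2]
warehouses = [
    Warehouse("FRA-2", 310.0, {"PSU-800W": 4}),
    Warehouse("AMS-1", 35.0, {"PSU-800W": 0}),
    Warehouse("LON-3", 120.0, {"PSU-800W": 2}),
]

reserved_at = None
if has_temperature_drift(psu_temps):
    reserved_at = reserve_nearest("PSU-800W", warehouses)
    print(f"Drift detected; reserved PSU-800W at {reserved_at}")
```

The nearest warehouse is skipped when it has no stock, so the reservation falls through to the next closest site.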

API connectors to SAP and Oracle ERP systems execute replenishment workflows without manual intervention. Developers configure business rules through the Python SDK: setting reorder points by facility and component type, defining substitute-part hierarchies, and overriding safety stock thresholds during refresh cycles. The platform also exposes REST endpoints for parts availability queries, so engineers can check stock levels directly from ticketing systems or custom dashboards without navigating legacy UIs.
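To make the idea of a reorder point per facility and component type concrete, here is a sketch using the textbook formula (expected lead-time demand plus a safety stock sized from a target service level). It does not use the platform's SDK; `ReorderRule`, its fields, and the sample values are assumptions for illustration only.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import NormalDist
from typing import Optional


@dataclass(frozen=True)
class ReorderRule:
    facility: str
    component_type: str
    mean_daily_demand: float     # units consumed per day
    demand_std_dev: float        # standard deviation of daily demand
    lead_time_days: float        # supplier lead time
    service_level: float         # target probability of no stockout in lead time
    safety_stock_override: Optional[float] = None  # e.g. set during a refresh cycle

    def safety_stock(self) -> float:
        if self.safety_stock_override is not None:
            return self.safety_stock_override
        z = NormalDist().inv_cdf(self.service_level)
        return z * self.demand_std_dev * sqrt(self.lead_time_days)

    def reorder_point(self) -> float:
        return self.mean_daily_demand * self.lead_time_days + self.safety_stock()


def needs_replenishment(rule: ReorderRule, on_hand: int, on_order: int = 0) -> bool:
    """Trigger a purchase when the inventory position reaches the reorder point."""
    return on_hand + on_order <= rule.reorder_point()


rule = ReorderRule("DC-EAST-1", "power_supply", mean_daily_demand=2.0,
                   demand_std_dev=1.0, lead_time_days=9.0, service_level=0.95)
print(round(rule.reorder_point(), 2))
print(needs_replenishment(rule, on_hand=20))
```

The formula assumes roughly normal, independent daily demand; failure clusters violate that assumption, which is one reason an override field is useful during refresh cycles.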

Forecasts server component demand by data center facility and time window, optimizing stock levels to meet 99.99% availability SLAs while reducing carrying costs tied to overstock.

Projects parts consumption based on installed server generations, usage telemetry from IPMI, and seasonal load patterns to prevent stockouts during peak maintenance cycles.
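As a rough illustration of how these three inputs could combine, the sketch below multiplies installed units by an annualized failure rate, a telemetry-derived utilization factor, and a seasonal factor. The multiplicative form, the `InstalledBase` type, and every number are assumptions, not the platform's forecasting model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstalledBase:
    generation: str
    units: int
    annual_failure_rate: float        # fraction of units failing per year
    utilization_factor: float = 1.0   # above 1.0 when telemetry shows heavy use


def projected_consumption(fleet: list[InstalledBase], window_days: int,
                          seasonal_factor: float = 1.0) -> float:
    """Expected number of replacement parts consumed in the window."""
    yearly = sum(g.units * g.annual_failure_rate * g.utilization_factor
                 for g in fleet)
    return yearly * (window_days / 365) * seasonal_factor


fleet = [
    InstalledBase("gen-3", units=4000, annual_failure_rate=0.04, utilization_factor=1.1),
    InstalledBase("gen-4", units=6000, annual_failure_rate=0.02),
]
# 73 days is one fifth of a year; 1.25 models a peak maintenance season.
demand = projected_consumption(fleet, window_days=73, seasonal_factor=1.25)
print(round(demand, 1))
```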

Engineers photograph failed components on-site; the platform identifies part numbers from visual markings and serial codes, then checks real-time availability across regional warehouses.

Data center OEMs manage inventory across geographically distributed facilities serving hyperscale operators with four-nines uptime SLAs. The platform ingests BMC telemetry from compute, storage, and power infrastructure to build failure probability models by hardware generation and deployment environment. Demand forecasts account for planned refresh cycles: when a hyperscale customer schedules a server upgrade, the system adjusts stock levels for decommissioned component returns and new-generation spares.

Substitute parts matching addresses hardware diversity. A customer running mixed Dell, HPE, and Supermicro servers needs generic components like power supplies and memory modules. The SDK enables developers to define compatibility matrices linking OEM-specific part numbers to interchangeable alternatives, ensuring engineers see all viable options during shortage scenarios without manual cross-referencing.
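One simple way to represent such a compatibility matrix is a mapping from each OEM part number to a generic equivalence class. The sketch below uses plain Python rather than the SDK, and the part numbers and class names are invented for illustration.

```python
# Hypothetical OEM part numbers mapped to a generic equivalence class.
COMPATIBILITY_MATRIX = {
    "DELL-PSU-800A": "psu-800w",
    "HPE-PSU-800B": "psu-800w",
    "SMC-PSU-800C": "psu-800w",
    "DELL-DIMM-32A": "ddr4-32gb-rdimm",
    "HPE-DIMM-32B": "ddr4-32gb-rdimm",
}


def substitutes_for(part_number: str, matrix: dict[str, str]) -> list[str]:
    """Return every other part number in the same generic class, sorted."""
    generic = matrix.get(part_number)
    if generic is None:
        return []  # unknown part: no substitutes can be asserted
    return sorted(p for p, g in matrix.items()
                  if g == generic and p != part_number)


print(substitutes_for("DELL-PSU-800A", COMPATIBILITY_MATRIX))
```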

The platform provides REST API connectors and pre-built SDKs for SAP S/4HANA and Oracle Cloud ERP. Developers authenticate via OAuth 2.0 and configure webhook endpoints to receive inventory events. Purchase order creation, goods receipt posting, and stock transfer workflows execute through standard ERP APIs without custom middleware. Python and TypeScript SDKs include code samples for common integration patterns.
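The authentication and webhook steps might look like the following sketch. It assumes the OAuth 2.0 client-credentials grant (the source does not say which grant the platform uses), and the token URL, scope, event type, and payload shape are all hypothetical. The token request is only constructed here, not sent.

```python
import base64
import json
from typing import Callable
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(token_url: str, client_id: str,
                        client_secret: str, scope: str) -> Request:
    """Build (but do not send) a client-credentials token request."""
    credentials = base64.b64encode(
        f"{client_id}:{client_secret}".encode()).decode()
    body = urlencode({"grant_type": "client_credentials", "scope": scope}).encode()
    return Request(token_url, data=body, method="POST", headers={
        "Authorization": f"Basic {credentials}",
        "Content-Type": "application/x-www-form-urlencoded",
    })


def handle_inventory_event(raw_body: bytes,
                           handlers: dict[str, Callable[[dict], None]]) -> str:
    """Dispatch a webhook payload to the handler registered for its type."""
    event = json.loads(raw_body)
    handler = handlers.get(event.get("type"))
    if handler is None:
        return "ignored"  # unknown event types are acknowledged but not processed
    handler(event.get("data", {}))
    return "handled"


# Hypothetical endpoint and credentials.
request = build_token_request("https://auth.example.com/oauth/token",
                              "my-client", "my-secret", "inventory.read")

low_stock_events: list[dict] = []
handlers = {"stock.low": low_stock_events.append}
status = handle_inventory_event(
    b'{"type": "stock.low", "data": {"part": "PSU-800W", "on_hand": 1}}', handlers)
print(status, low_stock_events)
```

Passing the request to `urllib.request.urlopen` would perform the actual token exchange against a real authorization server.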

Yes, teams can train custom models. The Python SDK exposes model training pipelines and hyperparameter configuration. Data scientists upload historical failure data segmented by server model, deployment year, and operating environment. The platform supports scikit-learn and PyTorch model formats, allowing teams to build custom forecasting algorithms or fine-tune pre-trained models using their proprietary telemetry data.

The platform ingests BMC and IPMI telemetry capturing temperature, voltage, fan speed, and disk SMART metrics. It also consumes ERP transaction logs for historical demand patterns, warranty claim records, and planned maintenance schedules. Developers configure data ingestion pipelines using Apache Kafka connectors or batch uploads via REST API. Real-time streams enable predictive alerts; batch data trains baseline forecasting models.

The SDK includes a compatibility matrix editor where engineers map OEM-specific part numbers to generic equivalents. Define compatibility rules based on form factor, connector type, and performance specifications. The platform validates substitutes against installation base data to confirm cross-vendor compatibility. Once configured, engineers querying parts availability see all interchangeable options ranked by proximity and lead time.
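The rule-based check and ranking described above can be sketched as follows. The attributes compared (form factor, connector, rated wattage as the performance specification) and the sort order (distance first, then lead time) are assumptions, as are all type names and sample data; validation against installed-base data is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartSpec:
    part_number: str
    form_factor: str
    connector: str
    rated_watts: int


@dataclass(frozen=True)
class StockOffer:
    part_number: str
    warehouse: str
    distance_km: float
    lead_time_days: float


def is_substitute(original: PartSpec, candidate: PartSpec) -> bool:
    """Same form factor and connector, and at least the original's rating."""
    return (candidate.form_factor == original.form_factor
            and candidate.connector == original.connector
            and candidate.rated_watts >= original.rated_watts)


def rank_offers(original: PartSpec, catalog: list[PartSpec],
                offers: list[StockOffer]) -> list[StockOffer]:
    """Keep offers for the original part or a valid substitute, nearest first."""
    allowed = {s.part_number for s in catalog
               if s.part_number == original.part_number or is_substitute(original, s)}
    viable = [o for o in offers if o.part_number in allowed]
    return sorted(viable, key=lambda o: (o.distance_km, o.lead_time_days))


psu_a = PartSpec("PSU-A", "CRPS", "C14", 800)
catalog = [
    psu_a,
    PartSpec("PSU-B", "CRPS", "C14", 1000),   # higher rating: acceptable
    PartSpec("PSU-C", "CRPS", "C20", 800),    # connector mismatch: rejected
]
offers = [
    StockOffer("PSU-A", "FRA-2", 300.0, 2.0),
    StockOffer("PSU-B", "AMS-1", 40.0, 1.0),
    StockOffer("PSU-C", "AMS-1", 40.0, 0.5),
]
ranked = rank_offers(psu_a, catalog, offers)
print([(o.part_number, o.warehouse) for o in ranked])
```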

Developers set override thresholds and approval workflows through the SDK. Configure rules like "flag any reorder recommendation exceeding the 30-day historical average" or "require manager approval for stock transfers above $50K in value." The platform logs all override events with justification notes, enabling retrospective analysis of model accuracy versus human judgment. Teams iteratively refine forecasting parameters based on override frequency.
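The two example rules and the override log could be expressed roughly as below. This is a plain-Python sketch with hypothetical names (`exceeds_historical_average`, `transfer_status`, `OverrideLog`), not the SDK's interface; only the $50K threshold and the 30-day average come from the text.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

APPROVAL_LIMIT_USD = 50_000.0  # transfers above this value need a manager


def exceeds_historical_average(recommended_qty: float, avg_30_day_qty: float) -> bool:
    """Flag a reorder recommendation larger than the 30-day historical average."""
    return recommended_qty > avg_30_day_qty


def transfer_status(value_usd: float, approved_by: Optional[str] = None) -> str:
    """Hold high-value stock transfers until someone has approved them."""
    if value_usd > APPROVAL_LIMIT_USD and approved_by is None:
        return "pending_approval"
    return "approved"


@dataclass
class OverrideLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, rule: str, subject: str, justification: str) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "rule": rule,
            "subject": subject,
            "justification": justification,
        })

    def override_rate(self, total_recommendations: int) -> float:
        """Share of recommendations that a human overrode."""
        if total_recommendations == 0:
            return 0.0
        return len(self.entries) / total_recommendations


log = OverrideLog()
if exceeds_historical_average(recommended_qty=120, avg_30_day_qty=85.0):
    log.record("reorder_above_30d_avg", "PSU-800W @ DC-EAST-1",
               "Refresh cycle scheduled; higher quantity is expected.")

print(transfer_status(75_000))
print(log.override_rate(total_recommendations=40))
```

A rising override rate for one rule is a hint that the corresponding forecasting parameter needs tuning.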

SPM systems optimize supply response but miss demand signals outside their inputs. An AI operating layer makes the full picture visible and actionable.

Connect with our integration team to map your ERP APIs and telemetry streams.
