Hyperscale uptime demands mean every truck roll needs predictive parts loading and zero-touch job documentation.

Build field service workflows by connecting work order systems to telemetry analysis, automated parts prediction, and technician mobile apps via APIs—enabling dispatch-to-debrief automation that reduces truck rolls and increases first-time fix rates.

Technicians guess which drives, DIMMs, or power supplies to load. When BMC logs point to memory errors but the tech brings drives, you schedule a second visit and miss SLA windows.

Work orders lack IPMI telemetry, rack location, firmware versions, or thermal history. Technicians waste billable time calling back to NOC for basic context before starting repairs.

Manual job completion forms delay billing, lose failure mode data for reliability engineering, and create compliance gaps when technicians skip required fields under time pressure.

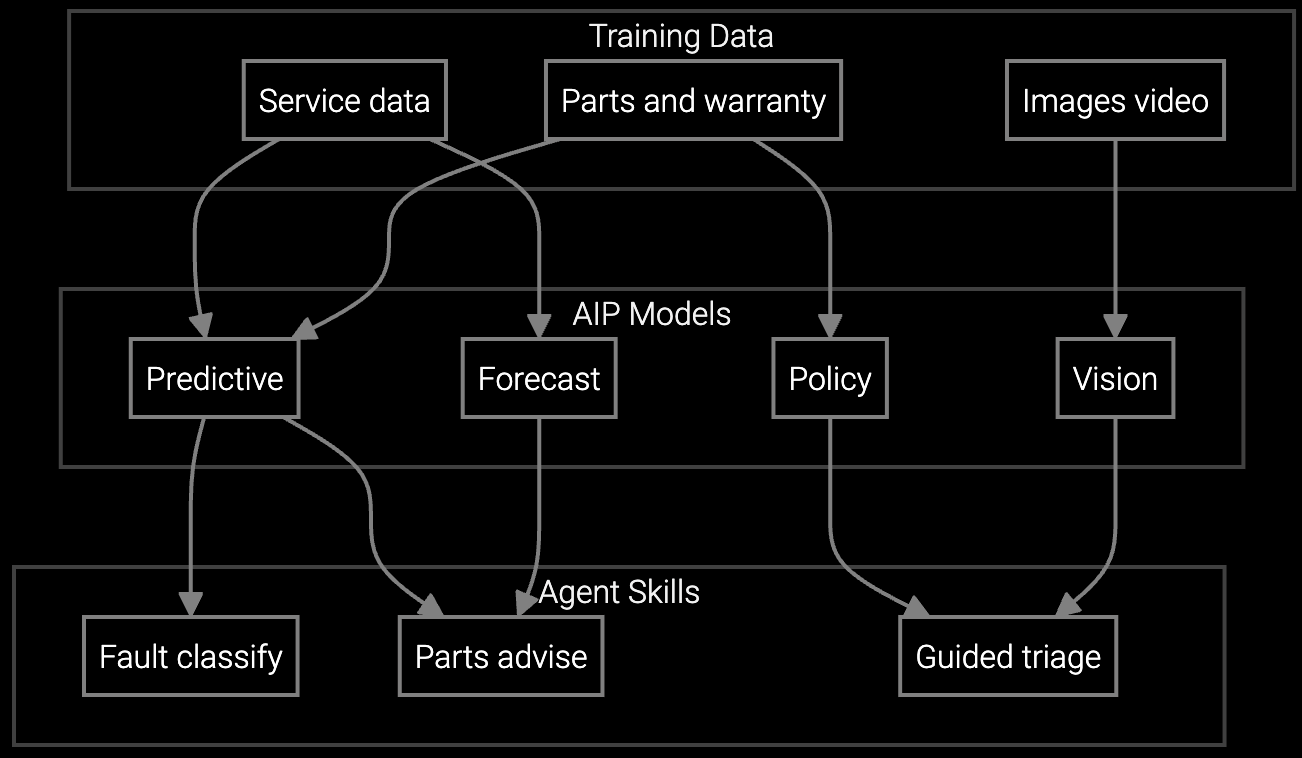

The platform exposes REST and GraphQL endpoints that connect FSM systems (ServiceMax, Oracle Field Service, custom dispatch tools) to AI services analyzing BMC/IPMI telemetry streams. When a work order triggers, the parts prediction API correlates fault codes with historical failure patterns to auto-populate a parts list on the technician's mobile device. Mobile SDKs (Python, TypeScript) let you embed guided diagnostics, auto-capture job photos, and push structured debrief data back to your ERP without custom ML pipelines.

Event-driven webhooks eliminate polling—when thermal anomalies cross thresholds or RAID arrays predict imminent failure, the platform auto-creates prioritized work orders in your FSM system with pre-staged context. Headless architecture means you own the workflow logic; the platform handles telemetry parsing, prediction model serving, and mobile data sync. Deploy on-prem or hybrid to keep sensitive BMC data within your VPC while using managed inference endpoints for compute-intensive tasks like image-based defect detection on replaced components.
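As a rough illustration of the event-driven flow, the sketch below turns a thermal webhook payload into a prioritized work order with pre-staged context. The payload keys (`asset_id`, `sensor`, `temperature_c`, `rack`, `firmware`), the threshold values, and the `WorkOrder` shape are all assumptions made for this example, not the platform's documented schema.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical thresholds in degrees Celsius; real limits depend on the hardware.
THERMAL_WARN_C = 75.0
THERMAL_CRIT_C = 90.0


@dataclass
class WorkOrder:
    asset_id: str
    priority: str
    summary: str
    context: dict = field(default_factory=dict)


def handle_thermal_event(event: dict) -> Optional[WorkOrder]:
    """Turn a thermal webhook payload into a work order, or None if below threshold."""
    temp = float(event["temperature_c"])
    if temp < THERMAL_WARN_C:
        return None  # normal reading, nothing to dispatch
    priority = "critical" if temp >= THERMAL_CRIT_C else "high"
    return WorkOrder(
        asset_id=event["asset_id"],
        priority=priority,
        summary=f"Thermal anomaly on {event['sensor']}: {temp:.1f} C",
        # Pre-staged context travels with the work order.
        context={k: event[k] for k in ("rack", "firmware") if k in event},
    )
```

In a real integration the returned object would be posted to the FSM system; keeping the decision logic in a pure function like this makes the thresholds easy to test before anything is dispatched.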

Correlate BMC thermal alerts and DIMM error logs to predict which specific parts will fail, reducing wrong-part dispatch for UPS and cooling system repairs.

Mobile copilot surfaces rack-specific firmware versions, hot aisle thermal maps, and guided RAID rebuild procedures—eliminating NOC escalation calls during time-sensitive PDU swaps.

Parse years of IPMI logs and tribal knowledge to identify whether recurring server crashes stem from faulty DIMMs, BIOS bugs, or PUE-driven thermal stress patterns.

Data center equipment manufacturers face unique workflow complexity: thousands of compute nodes generating continuous IPMI streams, diverse hardware generations with different BMC schemas, and hyperscale customers demanding four-nines uptime SLAs. Your technicians service everything from legacy RAID controllers to liquid-cooled AI training clusters, each with distinct failure signatures buried in telemetry.

API integration unifies these streams. Connect your Oracle Field Service dispatch system to BMC analytics endpoints that parse thermal zones, memory errors, and power supply voltages in real time. When a work order triggers for a suspected drive failure, the parts prediction API cross-references SMART data with historical patterns from that specific server generation and rack position—accounting for hot aisle effects or firmware-specific bugs. Mobile SDKs push this context to technicians' tablets before they leave the depot, eliminating guesswork on DIMM types or PDU capacities. Automated debrief webhooks capture replaced part serial numbers, thermal readings, and job photos, feeding your reliability engineering database without manual data entry.
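One way to picture the structured debrief is as a small record that is checked for completeness before it is sent. The sketch below is a minimal example under assumed field names (`replaced_part_serial`, `failure_mode`, `inlet_temp_c`, `photo_refs`); the actual debrief schema is not specified here.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class Debrief:
    work_order_id: str
    replaced_part_serial: str
    failure_mode: str
    inlet_temp_c: float
    photo_refs: list = field(default_factory=list)

    def missing_fields(self) -> list:
        """Return names of required text fields that are empty."""
        required = ("work_order_id", "replaced_part_serial", "failure_mode")
        return [name for name in required if not getattr(self, name).strip()]


def to_webhook_body(debrief: Debrief) -> str:
    """Serialize a complete debrief to JSON; refuse incomplete ones."""
    missing = debrief.missing_fields()
    if missing:
        raise ValueError(f"debrief incomplete, missing: {', '.join(missing)}")
    return json.dumps(asdict(debrief), sort_keys=True)
```

Rejecting incomplete records at serialization time is one way to close the compliance gap that appears when required fields are skipped under time pressure.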

You need REST or GraphQL endpoints for work order creation (inbound from your FSM), a parts prediction API that accepts fault codes and returns ranked part lists, a mobile SDK for technician app integration, and webhook listeners for event-driven triggers like thermal threshold breaches. The platform provides OpenAPI specs and client libraries in Python and TypeScript for standard FSM integrations (ServiceMax, Oracle, custom SAP modules).
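To make the "fault codes in, ranked parts out" contract concrete, here is a deliberately simple stand-in that ranks parts by how often they resolved past jobs with the same fault codes. It is a frequency count, not the platform's prediction model, and the fault codes, part names, and `HISTORY` data are invented for illustration.

```python
from collections import Counter

# Hypothetical history: (fault_code, part actually replaced) pairs from past jobs.
HISTORY = [
    ("MEM_ECC", "DIMM"), ("MEM_ECC", "DIMM"), ("MEM_ECC", "motherboard"),
    ("PSU_VOLT", "power supply"), ("PSU_VOLT", "power supply"),
    ("DISK_SMART", "drive"),
]


def rank_parts(fault_codes, history=HISTORY):
    """Rank parts by how often they resolved jobs with the given fault codes."""
    wanted = set(fault_codes)
    counts = Counter(part for code, part in history if code in wanted)
    total = sum(counts.values())
    if total == 0:
        return []  # no history for these codes, so no prediction
    # most_common sorts by count, highest first.
    return [(part, count / total) for part, count in counts.most_common()]
```

A response shaped like this (part plus a relative score) gives the mobile app enough to show a primary part and a fallback, which is the case that matters when memory errors and drive faults are easy to confuse.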

Use schema mapping adapters that normalize vendor-specific BMC formats (Dell iDRAC, HPE iLO, Supermicro IPMI) into a common telemetry model. The platform's ingestion layer handles protocol differences and exposes normalized sensor readings (temperature, voltage, error counters) via GraphQL queries. You maintain mapping configs in version control and deploy updates without rebuilding prediction models when you introduce new server SKUs.
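A mapping adapter can be as small as a lookup table per vendor. The sketch below assumes two made-up vendors and made-up raw field names; real BMC field names differ by vendor and firmware, and the config format shown is only one possible layout.

```python
# Hypothetical per-vendor mappings: raw field name -> (common name, scale factor).
MAPPINGS = {
    "vendor_a": {"InletTempC": ("inlet_temp_c", 1.0), "PSU1_mV": ("psu1_volts", 0.001)},
    "vendor_b": {"temp_inlet": ("inlet_temp_c", 1.0), "psu1_voltage": ("psu1_volts", 1.0)},
}


def normalize(vendor: str, raw: dict) -> dict:
    """Map a vendor-specific reading into the common telemetry model."""
    try:
        mapping = MAPPINGS[vendor]
    except KeyError:
        raise ValueError(f"no mapping config for vendor {vendor!r}") from None
    out = {}
    for raw_name, value in raw.items():
        if raw_name in mapping:  # unmapped fields are dropped, not guessed
            common_name, scale = mapping[raw_name]
            out[common_name] = float(value) * scale
    return out
```

Because the mapping is plain data, supporting a new server SKU means adding an entry to the table rather than changing code, which is what makes it practical to keep these configs in version control.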

A hybrid split is supported: deploy the telemetry ingestion and feature extraction layer on-prem in your VPC, then send anonymized feature vectors (not raw BMC logs) to managed inference endpoints for prediction scoring. Alternatively, run the full stack on-prem using containerized model serving and only sync aggregated metrics to the cloud for model retraining. Headless architecture lets you draw the boundary based on your data residency requirements.
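The on-prem feature extraction step might look something like the following sketch, which reduces raw readings to aggregates and replaces the asset identifier with a salted hash. The reading keys (`temp_c`, `ecc_errors`) and the output feature names are assumptions. Note that a salted hash is pseudonymization rather than full anonymization, so whether it satisfies a given data residency policy is a separate question.

```python
import hashlib
from statistics import mean


def extract_features(asset_id: str, readings: list, salt: str) -> dict:
    """Reduce raw readings to aggregate features with a pseudonymous key."""
    temps = [r["temp_c"] for r in readings]
    if not temps:
        raise ValueError("no readings to summarize")
    # Salted hash lets the scoring side join results without seeing the real ID.
    key = hashlib.sha256((salt + asset_id).encode()).hexdigest()[:16]
    return {
        "asset_key": key,
        "temp_mean_c": mean(temps),
        "temp_max_c": max(temps),
        "ecc_errors": sum(r.get("ecc_errors", 0) for r in readings),
    }
```

Only the returned dictionary would leave the VPC; the raw readings and the salt stay on-prem.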

Mobile SDKs cache job context, guided procedures, and parts lists locally before dispatch. Technicians complete diagnostics and capture debrief data offline; the SDK queues updates and syncs when connectivity returns. Conflict resolution handles cases where NOC updates the work order while the technician is offline. You configure sync rules (immediate for critical failures, batch for routine jobs) via SDK initialization parameters.
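The queue-then-sync behavior can be sketched with a version check: an update conflicts if the server's copy of the work order has moved on since the technician last saw it. This is one common conflict detection approach, shown here as an assumption; the SDK's actual resolution strategy and the `Update` fields below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Update:
    work_order_id: str
    attribute: str
    value: str
    base_version: int  # server version the technician last saw
    critical: bool = False


class OfflineQueue:
    def __init__(self):
        self.pending = []

    def record(self, update: Update) -> None:
        """Store an update made while the device is offline."""
        self.pending.append(update)

    def sync(self, server_versions: dict) -> tuple:
        """Split pending updates into (applied, conflicts) and clear the queue.

        Critical updates are ordered first, mirroring an 'immediate' sync rule.
        """
        applied, conflicts = [], []
        for u in sorted(self.pending, key=lambda u: not u.critical):
            current = server_versions.get(u.work_order_id, u.base_version)
            # A newer server version means the NOC edited the order meanwhile.
            (conflicts if current > u.base_version else applied).append(u)
        self.pending = []
        return applied, conflicts
```

Conflicting updates are returned rather than discarded, so the app can show the technician both versions instead of silently overwriting the NOC's change.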

Run a shadow pilot: configure webhook listeners to receive work order triggers from your FSM without auto-dispatching. Log parts predictions and compare to actual parts used by technicians over 30 days. Measure potential first-time fix improvement and truck roll reduction without changing live workflows. After validating accuracy on 100+ jobs, enable auto-population of parts lists on mobile devices for a single high-volume server SKU before expanding to storage and cooling systems.
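The comparison at the heart of the shadow pilot is straightforward to compute from the logged data. The sketch below assumes each logged job is a dictionary with `predicted` and `used` part lists, and it counts a job as covered when every part the technician used appeared in the prediction. Both the record shape and that definition of a hit are choices made for this example.

```python
def shadow_pilot_report(jobs: list, min_jobs: int = 100) -> dict:
    """Compare logged predictions with parts actually used.

    A job counts as a hit when every part the technician used was in the
    predicted list, i.e. the prediction would have covered the repair.
    """
    if not jobs:
        raise ValueError("no jobs logged")
    hits = sum(1 for j in jobs if set(j["used"]) <= set(j["predicted"]))
    return {
        "jobs": len(jobs),
        "coverage": hits / len(jobs),
        "enough_data": len(jobs) >= min_jobs,
    }
```

The `enough_data` flag reflects the 100-job validation bar; comparing `coverage` against your current first-time fix rate for the same SKU gives a rough estimate of the potential improvement before any live workflow changes.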

Get API documentation and technical architecture guidance from Bruviti's integration team.
