Hyperscale customers demand 99.99% uptime while your inventory carrying costs climb with every new server generation.

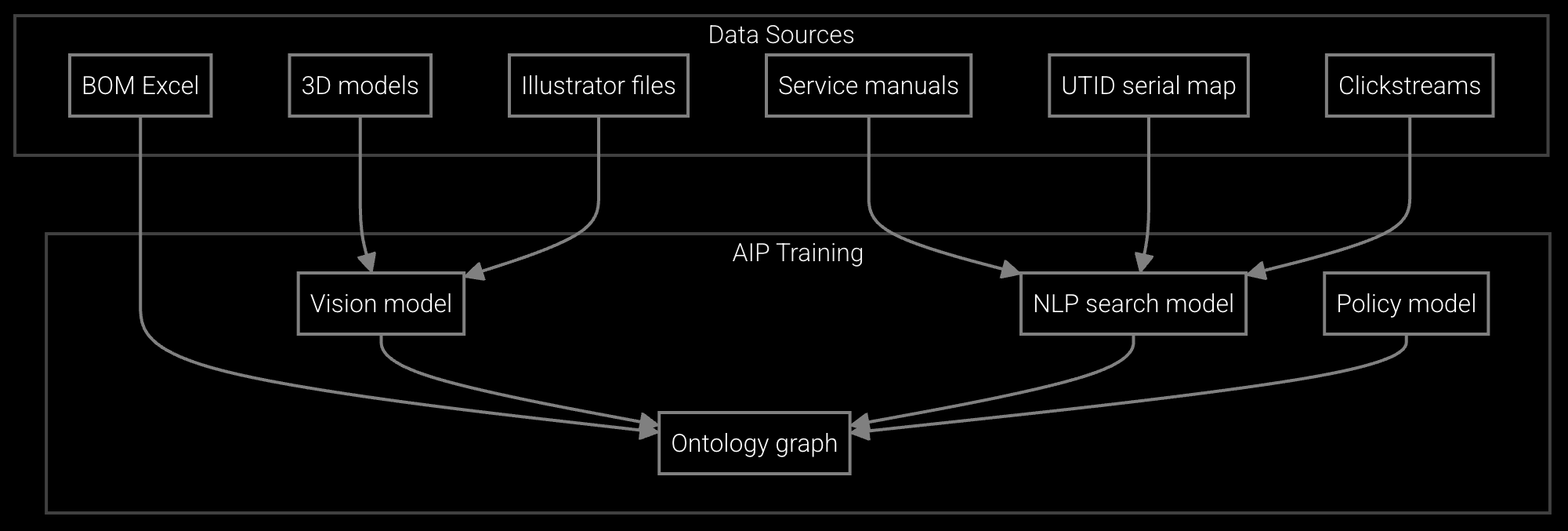

Data center OEMs face a strategic choice: build predictive inventory capabilities in-house or adopt AI platforms. The hybrid approach combines API-first customization with pre-trained models, delivering faster time-to-value without vendor lock-in while maintaining control over proprietary forecasting logic.

Multi-generation server fleets require overlapping parts inventories. SSDs, DIMMs, and power supplies proliferate across SKUs while hyperscale customers demand instant availability.

Component lifecycles shorten as DDR5 replaces DDR4 and NVMe generations turn over. Forecast too conservatively and miss SLAs. Forecast too aggressively and write off excess stock.
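
Missing an SLA and writing off excess stock are the two sides of the classic newsvendor trade-off. Here is a minimal sketch. It assumes Poisson-distributed demand for one part over a single replenishment period. It also uses two hypothetical per-unit costs: `underage_cost` for a shortfall that risks an SLA miss, and `overage_cost` for a unit later written off. Under those assumptions, the cost-minimizing stock level is the smallest quantity whose cumulative demand probability reaches the critical ratio Cu / (Cu + Co).

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), accumulated term by term."""
    term = math.exp(-lam)
    total = term
    for i in range(1, k + 1):
        term *= lam / i  # P(X = i) derived from P(X = i - 1)
        total += term
    return total


def newsvendor_stock(mean_demand: float, underage_cost: float, overage_cost: float) -> int:
    """Smallest stock level S with P(demand <= S) >= Cu / (Cu + Co).

    Assumes Poisson demand; both costs are hypothetical inputs, not platform values.
    """
    if underage_cost <= 0 or overage_cost <= 0:
        raise ValueError("costs must be positive")
    critical_ratio = underage_cost / (underage_cost + overage_cost)
    stock = 0
    while poisson_cdf(stock, mean_demand) < critical_ratio:
        stock += 1
    return stock


# Mean demand of 4 units; a shortfall costs 9x as much as an excess unit.
print(newsvendor_stock(4.0, underage_cost=900.0, overage_cost=100.0))  # 7
```

Raising the write-off cost relative to the shortfall cost pulls the recommended stock level down. That is the "too aggressive" side of the same trade-off, expressed as a single ratio.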

When the exact DIMM is unavailable, which alternatives maintain compatibility without voiding warranties? Manual lookups delay fulfillment and introduce substitution errors.

Building predictive inventory from scratch requires ML engineers, data scientists, and 18+ months before the first forecasting model reaches production. Buying traditional software locks you into rigid workflows that cannot adapt to your proprietary installed base data or unique warranty terms.

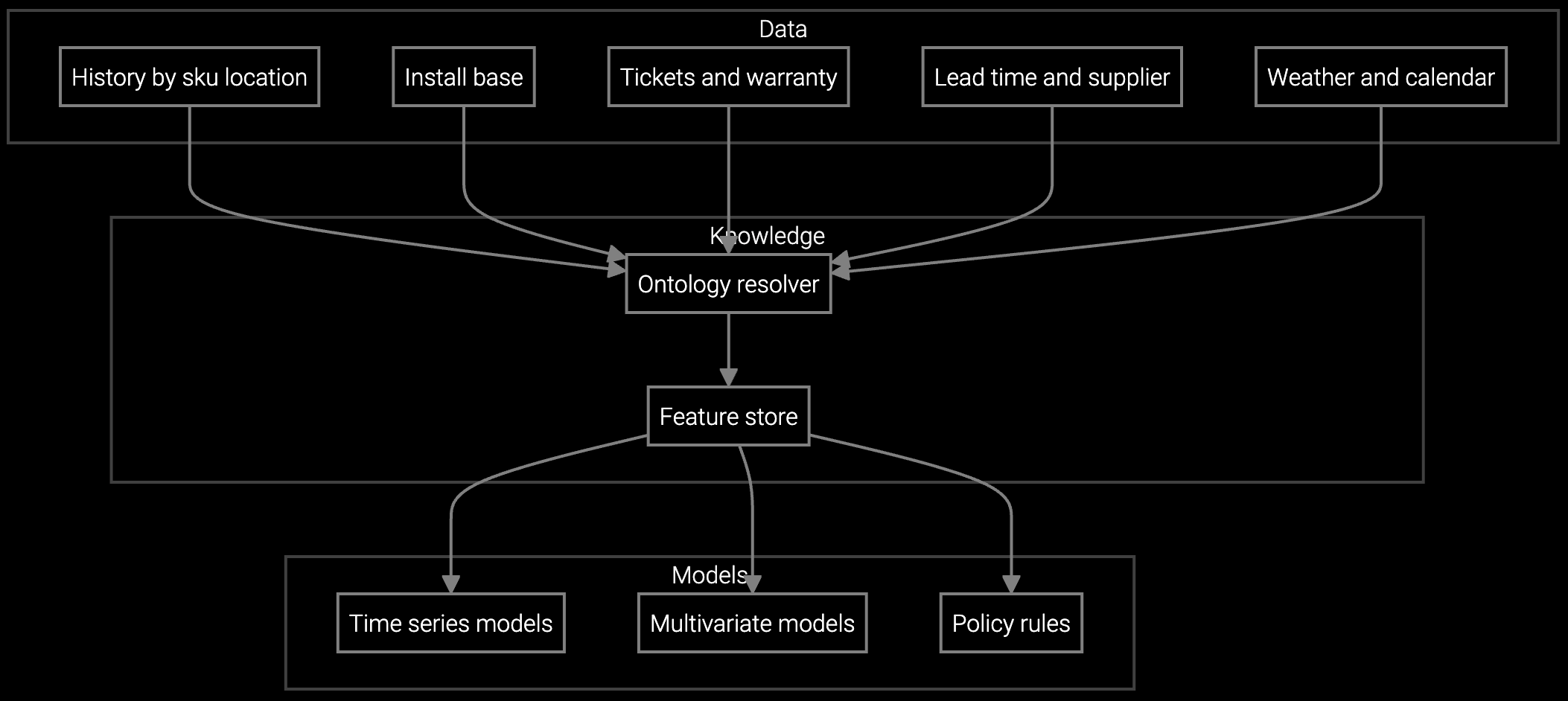

The platform approach delivers pre-trained models for demand forecasting and substitute parts matching while exposing APIs that let your team customize logic, integrate with SAP or Oracle ERP systems, and retain control over competitive differentiation. Deploy foundational capabilities in weeks, then extend with your own rules for multi-location optimization or EOL transition planning. No vendor lock-in, no starting from zero.

Project DIMM and SSD consumption by data center region based on server age, MTBF curves, and seasonal hyperscale expansion cycles.
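
MTBF on its own implies a constant failure rate, so server age would not matter. One way to make age count is to fit a Weibull lifetime whose mean equals the MTBF, where a shape parameter above 1 means wear-out. You then sum each cohort's conditional failure probability by region. The sketch below works under stated assumptions: the cohort schema, shape value, and fleet numbers are hypothetical, and seasonal expansion cycles are left out.

```python
import math
from collections import defaultdict


def weibull_scale(mtbf_hours: float, shape: float) -> float:
    """Weibull scale parameter whose mean life equals the given MTBF."""
    return mtbf_hours / math.gamma(1.0 + 1.0 / shape)


def failure_prob(age_hours: float, horizon_hours: float, shape: float, scale: float) -> float:
    """P(failure in (age, age + horizon] | unit survived to age)."""
    def cumulative_hazard(t: float) -> float:
        return (t / scale) ** shape

    # Survival ratio R(age + h) / R(age) = exp(H(age) - H(age + h)).
    return 1.0 - math.exp(cumulative_hazard(age_hours) - cumulative_hazard(age_hours + horizon_hours))


def project_regional_demand(installed_base, horizon_hours, mtbf_hours, shape):
    """Expected replacement units per region over the horizon.

    installed_base: iterable of (region, age_hours, unit_count) cohorts (hypothetical schema).
    """
    scale = weibull_scale(mtbf_hours, shape)
    demand = defaultdict(float)
    for region, age_hours, units in installed_base:
        demand[region] += units * failure_prob(age_hours, horizon_hours, shape, scale)
    return dict(demand)


# Hypothetical cohorts: (region, age in hours, installed units).
fleet = [("us-east", 20_000, 4_000), ("us-east", 35_000, 1_500), ("eu-west", 8_000, 2_500)]
print(project_regional_demand(fleet, horizon_hours=720, mtbf_hours=1_000_000, shape=1.5))
```

With `shape=1.0` the model reduces to the constant-rate case, in which older and newer units fail at the same rate.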

Optimize stock levels across geographically distributed warehouses supporting hyperscale customers with four-hour SLA requirements.
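
With a four-hour SLA, a part sitting in the wrong warehouse is as good as no part. The practical question is how to split a fixed number of units across sites. This minimal sketch uses greedy marginal allocation, a standard textbook technique for multi-location base-stock problems. It assumes Poisson lead-time demand at each site and ignores transfers between warehouses. Site names and demand rates are hypothetical.

```python
import heapq
import math


def poisson_sf(k: int, lam: float) -> float:
    """P(X > k) for X ~ Poisson(lam)."""
    term = math.exp(-lam)
    cdf = term
    for i in range(1, k + 1):
        term *= lam / i
        cdf += term
    return max(0.0, 1.0 - cdf)


def allocate_stock(lead_time_demand: dict[str, float], budget: int) -> dict[str, int]:
    """Spread `budget` units across sites to minimize total expected backorders.

    Adding unit S+1 at a site cuts its expected backorders by P(D > S). Because that
    gain shrinks as S grows, giving each unit to the site with the largest gain is optimal.
    """
    stock = {site: 0 for site in lead_time_demand}
    if not stock:
        return stock
    # heapq is a min-heap, so gains are negated to pop the largest first.
    heap = [(-poisson_sf(0, lam), site) for site, lam in lead_time_demand.items()]
    heapq.heapify(heap)
    for _ in range(budget):
        _, site = heapq.heappop(heap)
        stock[site] += 1
        heapq.heappush(heap, (-poisson_sf(stock[site], lead_time_demand[site]), site))
    return stock


# Mean demand per site during one replenishment lead time (hypothetical values).
print(allocate_stock({"ashburn": 2.0, "frankfurt": 0.5}, budget=3))  # {'ashburn': 2, 'frankfurt': 1}
```

Weighting each site's gain by its SLA penalty would be a natural extension. It is omitted here to keep the allocation rule visible.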

Automatically maintain substitute parts compatibility matrices as NVMe generations evolve and DDR5 variants proliferate.
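
A compatibility matrix can be regenerated from catalog attributes whenever a new part is added, rather than maintained by hand. The sketch below assumes a hypothetical DIMM schema and a simple, illustrative rule set:

- same generation, module type, and capacity;
- equal or higher rated speed;
- on a warranty-qualified list.

Real qualification rules depend on the platform and are the OEM's to define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimm:
    part_number: str  # hypothetical part numbers below
    generation: str   # e.g. "DDR4", "DDR5"
    module_type: str  # e.g. "RDIMM", "LRDIMM"
    capacity_gb: int
    speed_mts: int    # rated speed in MT/s
    qualified: bool   # hypothetical flag: approved for use without voiding warranty


def is_substitute(original: Dimm, candidate: Dimm) -> bool:
    """Apply the illustrative substitution rules; not a real qualification policy."""
    return (
        candidate.part_number != original.part_number
        and candidate.qualified
        and candidate.generation == original.generation
        and candidate.module_type == original.module_type
        and candidate.capacity_gb == original.capacity_gb
        and candidate.speed_mts >= original.speed_mts
    )


def build_matrix(catalog: list[Dimm]) -> dict[str, list[str]]:
    """Map each part to its allowed substitutes, closest rated speed first."""
    matrix = {}
    for part in catalog:
        subs = [c for c in catalog if is_substitute(part, c)]
        subs.sort(key=lambda c: (c.speed_mts, c.part_number))
        matrix[part.part_number] = [c.part_number for c in subs]
    return matrix


catalog = [
    Dimm("M-32-4800", "DDR5", "RDIMM", 32, 4800, True),
    Dimm("M-32-5600", "DDR5", "RDIMM", 32, 5600, True),
    Dimm("X-32-5600", "DDR5", "RDIMM", 32, 5600, False),
    Dimm("M-32-3200", "DDR4", "RDIMM", 32, 3200, True),
]
print(build_matrix(catalog)["M-32-4800"])  # ['M-32-5600']
```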

Hyperscale cloud providers evaluate OEM partners on uptime guarantees and replacement part availability. When AWS or Azure negotiates SLAs, your inventory responsiveness becomes a competitive wedge. The shift to liquid cooling and 400G networking accelerates component diversity, multiplying SKU counts while shortening forecasting horizons.

OEMs that deploy AI-driven inventory optimization now gain a 12-18 month advantage over rivals still relying on spreadsheet-based reorder points. This window matters because customers increasingly benchmark OEM service capabilities when selecting server suppliers for new data center buildouts.

Data center OEMs typically see a measurable reduction in carrying costs within 90 days when they start with high-turnover components such as memory and storage. Full ROI, including stockout prevention and obsolescence reduction, materializes in 6-9 months as forecasting models incorporate seasonal hyperscale expansion patterns and component lifecycle curves.

API-first platforms eliminate lock-in by exposing customization endpoints and allowing ERP integration without middleware dependencies. Unlike monolithic software that embeds proprietary forecasting logic you cannot modify, open integration architectures let you switch providers or bring capabilities in-house as your team matures without losing historical model training or business rules.
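
The lock-in argument comes down to where your business rules live. Suppose ordering logic depends only on a small forecasting interface that your team owns. Then a vendor model and an in-house baseline become interchangeable behind it. In this minimal sketch every name is hypothetical, and Python's `typing.Protocol` supplies the structural interface.

```python
import math
from typing import Protocol


class DemandForecaster(Protocol):
    """Interface owned by your team; any provider's client can implement it."""

    def forecast(self, part_number: str, region: str, months: int) -> list[float]: ...


class MovingAverageForecaster:
    """In-house baseline: projects the mean of the last `window` months forward."""

    def __init__(self, history: dict[tuple[str, str], list[float]], window: int = 3):
        self.history = history
        self.window = window

    def forecast(self, part_number: str, region: str, months: int) -> list[float]:
        recent = self.history.get((part_number, region), [])[-self.window:]
        level = sum(recent) / len(recent) if recent else 0.0
        return [level] * months


def plan_order(forecaster: DemandForecaster, part_number: str, region: str,
               months: int, on_hand: int, safety_floor: int = 0) -> int:
    """Proprietary rule kept in your code: cover forecast demand plus a floor."""
    demand = sum(forecaster.forecast(part_number, region, months))
    return max(0, math.ceil(demand + safety_floor - on_hand))


history = {("DIMM-32G", "us-east"): [10.0, 12.0, 14.0, 20.0]}
baseline = MovingAverageForecaster(history)
print(plan_order(baseline, "DIMM-32G", "us-east", months=2, on_hand=20, safety_floor=5))  # 16
```

Swapping providers means writing another class with the same `forecast` method. `plan_order` and its safety floor stay unchanged.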

Building from scratch requires 18-24 months and specialized ML talent focused on time-series forecasting and substitute parts matching. This timeline creates competitive risk if rivals deploy faster. The strategic question is whether your differentiation lies in inventory algorithms or in using superior inventory availability to win customer contracts. Most OEMs find a greater return from adopting a domain-focused AI platform, which accelerates deployment while preserving customization control.

Predictive models ingest installed base age distribution, component lifecycle data, and historical transition curves to forecast demand decline for legacy parts while ramping newer SKUs. The platform flags obsolescence risk 6-9 months ahead, allowing gradual inventory drawdown synchronized with customer refresh cycles rather than abrupt write-offs when EOL notices arrive.
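
The transition logic can be illustrated with a deliberately simple model. It makes two hypothetical assumptions: a fixed fraction of the legacy installed base is refreshed each month, and legacy and successor units fail at the same monthly rate. The sketch splits replacement demand between the legacy part and its successor. It also reports how much legacy stock would remain unconsumed at the end of the horizon.

```python
def transition_forecast(legacy_units: float, refresh_rate: float,
                        failure_rate: float, months: int) -> tuple[list[float], list[float]]:
    """Monthly replacement demand for a legacy part and its successor.

    refresh_rate: fraction of remaining legacy units replaced by new servers each month.
    failure_rate: expected replacements per installed unit per month (assumed equal for both).
    """
    remaining, migrated = float(legacy_units), 0.0
    legacy_demand, successor_demand = [], []
    for _ in range(months):
        refreshed = remaining * refresh_rate
        remaining -= refreshed
        migrated += refreshed
        legacy_demand.append(remaining * failure_rate)
        successor_demand.append(migrated * failure_rate)
    return legacy_demand, successor_demand


def excess_legacy_stock(on_hand: float, legacy_demand: list[float]) -> float:
    """Units expected to remain when the horizon ends: the write-off exposure."""
    return max(0.0, on_hand - sum(legacy_demand))


# Hypothetical fleet and rates; a 9-month horizon mirrors the early-warning window above.
legacy, successor = transition_forecast(10_000, refresh_rate=0.08, failure_rate=0.002, months=9)
excess = excess_legacy_stock(on_hand=250, legacy_demand=legacy)
if excess > 0:
    print(f"Obsolescence risk: ~{excess:.0f} units unconsumed after 9 months")
```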

BMC and IPMI telemetry provide real-time failure signals for power supplies, fans, and storage. Warranty claim histories reveal component-specific MTBF patterns. Customer deployment data shows geographic concentration and usage intensity. Combining these sources improves forecast accuracy by 30-40% compared with reorder-point methods, especially when predicting regional demand spikes during hyperscale expansion phases.
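
Here is a simplified illustration of how these signals can combine, with every parameter hypothetical. Warranty claims yield an MTBF estimate: operating hours divided by failures. The healthy fleet is projected at that constant rate, scaled by a usage-intensity factor. Units with active BMC health warnings are treated separately, with their own failure probability. This sketches the idea only and is not meant to reproduce the accuracy figures cited above.

```python
def mtbf_from_warranty(unit_operating_hours: float, failures: int) -> float:
    """Point estimate of MTBF: total operating hours divided by observed failures."""
    if failures <= 0:
        raise ValueError("need at least one recorded failure")
    return unit_operating_hours / failures


def blended_demand(fleet_units: int, flagged_units: int, horizon_hours: float,
                   mtbf_hours: float, flagged_failure_prob: float,
                   usage_multiplier: float = 1.0) -> float:
    """Expected replacements over the horizon for one part in one region.

    Healthy units: constant failure rate (horizon / MTBF), scaled for usage intensity.
    This linear approximation holds when the horizon is much shorter than the MTBF.
    Flagged units: hypothetical probability of failing once BMC/IPMI telemetry warns.
    """
    healthy_units = fleet_units - flagged_units
    baseline = healthy_units * usage_multiplier * horizon_hours / mtbf_hours
    return baseline + flagged_units * flagged_failure_prob


mtbf = mtbf_from_warranty(unit_operating_hours=2_000_000, failures=4)  # 500,000 h
print(blended_demand(1_000, flagged_units=10, horizon_hours=720,
                     mtbf_hours=mtbf, flagged_failure_prob=0.5))
```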

Service parts management (SPM) systems optimize the supply response but miss demand signals that fall outside their inputs. An AI operating layer makes the full picture visible and actionable.


See how leading data center OEMs balance speed, control, and competitive differentiation with Bruviti's platform approach.
